The EU AI Act Newsletter #51: Final Text Published

The European Parliament publishes the corrigendum of the AI Act text, amending major errors in the language.

Welcome to the EU AI Act Newsletter, a brief biweekly newsletter by the Future of Life Institute providing you with up-to-date developments and analyses of the proposed EU artificial intelligence law.

Legislative Process

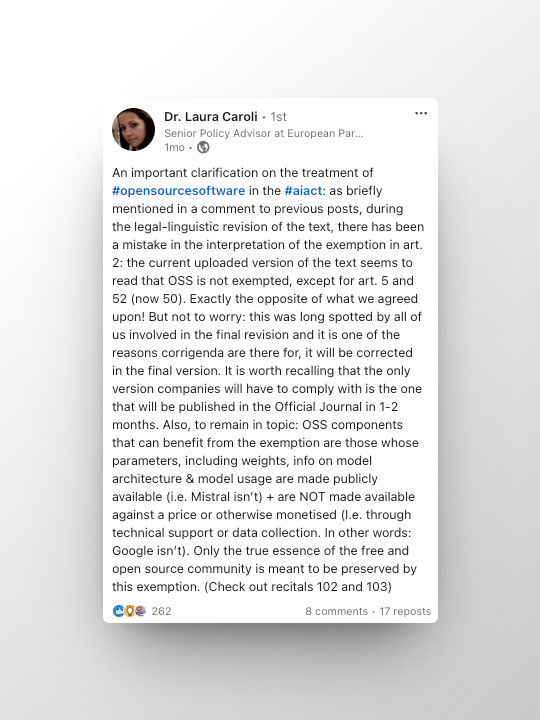

Final AI Act text: On 13 March 2024, the European Parliament adopted the AI Act at first reading. The corrigendum of the text has now been released, correcting the language in the Act. For example, Laura Caroli, Senior Policy Advisor at the European Parliament, shared a clarification on open-source AI.

Analyses

Joint statement by creative workers' organisations: A coalition of creative industry organisations representing writers, performers, composers, and more issued a statement regarding the AI Act. They welcome the Act's provisions for general-purpose AI, particularly those mandating compliance with EU copyright law and data transparency. The signatories emphasise that the Act still needs to be implemented effectively at the EU and national level. They raise concerns over the Act's handling of text and data mining exceptions, which fail to adequately address the use of generative AI models. In the coalition's view, such exceptions were not conceived with large-scale generative AI models in mind. They argue that despite existing EU directives, creators have not been adequately compensated for the use of their work in AI-generated content. The organisations urge EU policymakers to engage in comprehensive and democratic discussions to establish a legal framework that protects creators' rights and ensures fair compensation in the era of generative AI.

UK rethinks AI legislation as alarm grows over potential risks: Financial Times journalists Anna Gross and Cristina Criddle reported (unfortunately behind a paywall) that the UK government is starting to draft new legislation to put limits on the production of large language models. The plans come amidst growing concerns among regulators, including the UK competition authority, regarding the potential negative impacts of such models. Gross and Criddle note that the EU has adopted a more stringent stance on AI than the UK, with the European Parliament recently endorsing some of the first and most stringent regulations through the AI Act. They state that AI startups have voiced concerns about the EU's regulations, viewing them as excessive oversight that might impede innovation. Allegedly, this tough legislation has led other nations, such as Canada and the United Arab Emirates, to entice some of Europe's most promising tech firms to consider relocating.

AI Act in the medicinal product lifecycle: The European Federation of Pharmaceutical Industries and Associations (EFPIA) issued a statement on the implementation of the AI Act in the pharmaceutical sector, noting AI's increasing role in medicine development. They stress the need for AI regulations to be fit-for-purpose, adequately tailored and risk-based, and not to duplicate existing rules. EFPIA proposes five considerations: 1) R&D of AI-based drug development tools should qualify for the AI Act's research exemption if used solely for scientific research; 2) AI used in R&D of medicines not falling under the exemption should not be labelled "high risk" as per the Act's criteria in Article 6; 3) Additional regulation for R&D of AI is unnecessary, as existing rules for medicine development suffice; 4) EFPIA welcomes the European Medicines Agency's efforts to assess the impact of AI in R&D; and 5) AI regulation should be adaptable to various contexts, including development stages, risk assessments and human oversight.

EU’s soft touch on open-source AI: Francesca Giannaccini and Tobias Kleineidam, a current and a former researcher at Democracy Reporting International respectively, published an op-ed in The Parliament Magazine arguing that the AI Act introduces exemptions for open AI models, potentially allowing malicious actors to exploit these models to spread harmful content. Giannaccini and Kleineidam argue that unlike proprietary tools such as ChatGPT or Gemini, which restrict access to their core technology, open-source models offer modifiability, enabling developers to create harmful content at scale. The authors' testing revealed that open-source models generated harmful content with alarming credibility and creativity. Giannaccini and Kleineidam acknowledge the benefits of open-source AI, but claim that the Act's approach lacks balance, favouring open development without adequate safeguards. In their view, while proprietary models fall under strict regulations, open models enjoy broad exemptions, requiring only transparency about training data and adherence to copyright law.

AI Act's relationship with data protection law: DLA Piper lawyers James Clark, Muhammed Demircan and Kalyna Kettas wrote an overview of the close relationship between EU data protection law (GDPR) and the AI Act. Firstly, the Act mainly focuses on ensuring the safe development and use of AI systems rather than granting individual rights. Secondly, European data protection authorities (DPAs) were among the first regulatory bodies to enforce regulations against the use of AI systems, due to concerns around personal data and lack of transparency, among other issues. Thirdly, GDPR and the AI Act are both founded on key principles. GDPR principles include lawfulness, fairness, transparency, purpose limitation, data minimisation, accuracy, storage limitation, integrity, and confidentiality; the Act outlines general principles for all AI systems drawn from the OECD AI Principles and the High-Level Expert Group on AI's ethical principles. Finally, the Act mandates conformity assessments (not risk assessments but rather demonstrative tools to show compliance), whereas GDPR requires Data Protection Impact Assessments (DPIAs).

Events

The Future of Life Institute invitation: We are pleased to invite the readers of our newsletter to attend FLI’s event “Made in Europe: Generating the ecosystem of trustworthy AI innovation”. We have some great speakers joining us, including Kilian Gross, Head of Unit at the European Commission, as well as Laura Caroli, Senior Policy Advisor at the European Parliament. It will be an informal event, bringing together policymakers, civil society, industry and academia to reflect on the AI Act as a historic milestone, as we turn to making implementation and enforcement a success, while ensuring that Europe continues to lead AI governance both at home and on the international stage. The event is operating on an invitation basis. Please express your interest here by joining the waiting list.

Did you find this edition helpful? Please share it with a friend or colleague who might benefit from it. I'm always open to hearing your thoughts on the AI Act as well as feedback for the newsletter. Thank you for reading!